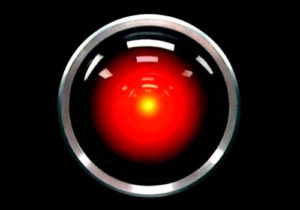

AI is rapidly emerging as an inevitable component of developing technologies. One can hardly point to an industry that is not already seeking to adopt advances in computational intelligence: from advertising to autonomous vehicles, from medical diagnosis to stock trading, from video games to missile defense and cyber-security, all strive to exploit AI techniques in the very near future. In the midst of this intensifying hype, a growing concern is whether AI can be trusted with the many critical tasks that smart machines are expected to perform. How can we ensure that our self-driving car never decides to rush its passenger off a cliff? How can we guarantee that autonomous military drones cannot be manipulated to target friendlies? Is there any way to prevent the intelligent teddy bears of the future from developing aggressive mental disorders and harming children? Can we implement a Skynet that never embarks on destroying the human race, or a post-Turing-test HAL 9000 that would never think of killing its companions?

The AI Safety Research Initiative aims to lay concrete foundations for the safety and security of intelligent machines from both theoretical and engineering perspectives. Our research aspires to develop comprehensive models, metrics, frameworks, and tools for analysis, implementation, and mitigation of deleterious behaviors in AI systems.